Welcome, tech enthusiasts and curious minds! Have you ever wondered how your devices can access data so quickly and efficiently? A big part of the answer is cache memory, and the rules that decide where data goes inside a cache are called cache mapping.

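Cache mapping is the set of rules that decides where a block of data from main memory is placed in the cache and how it is found again later. Cache memory itself is a small, high-speed storage area between the processor and main memory (RAM) that holds copies of frequently accessed data. Because the cache is much smaller than main memory, many memory blocks must compete for the same cache space, and the mapping technique determines how that competition plays out.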

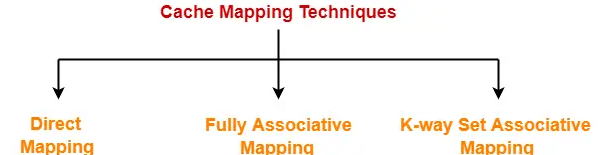

The three main cache mapping techniques are:

1. Direct Mapping

Imagine a shelf where every book has its own assigned spot. When you want a book, you know exactly where to look, based on that spot alone.

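Direct mapping works the same way. Each block of main memory maps to exactly one line in the cache, typically chosen as the block number modulo the number of cache lines. To check whether the data is cached, the processor looks only at that one line and compares the stored tag with the tag bits of the requested address. The catch is that many memory blocks share each line, so loading a new block evicts whatever was there before, even if other lines in the cache are empty.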
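To make the "one fixed spot" idea concrete, here is a minimal Python sketch of a direct-mapped cache. It assumes byte addresses and a hypothetical geometry of 8 lines of 16 bytes each; the class name `DirectMappedCache` is illustrative, and the sketch tracks only tags, not the cached data itself.

```python
# Toy direct-mapped cache: each memory block has exactly one possible line.
# Assumed (hypothetical) geometry: 8 lines of 16 bytes each.
NUM_LINES = 8
BLOCK_SIZE = 16


class DirectMappedCache:
    def __init__(self, num_lines=NUM_LINES, block_size=BLOCK_SIZE):
        self.num_lines = num_lines
        self.block_size = block_size
        # One tag slot per line; None means the line is empty (invalid).
        self.tags = [None] * num_lines

    def split(self, address):
        """Break a byte address into (tag, index, offset)."""
        offset = address % self.block_size
        block_number = address // self.block_size
        index = block_number % self.num_lines  # the one line this block may use
        tag = block_number // self.num_lines   # which of the competing blocks is stored there
        return tag, index, offset

    def access(self, address):
        """Return True on a hit, False on a miss (loading the block on a miss)."""
        tag, index, _ = self.split(address)
        if self.tags[index] == tag:
            return True
        self.tags[index] = tag  # evict whatever block occupied this line
        return False


cache = DirectMappedCache()
# 0x000 and 0x080 are 128 bytes (8 blocks) apart, so both map to line 0.
trace = [0x000, 0x004, 0x080, 0x000]
print([cache.access(a) for a in trace])  # [False, True, False, False]
```

Addresses 0x000 and 0x080 land on the same line, so they keep evicting each other (a *conflict miss*) even though the other seven lines sit empty.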

2. Set-Associative Mapping

Picture a parking lot divided into sections, each with a few spaces. Your ticket assigns you to one particular section, but within that section you can park in any free space.

Set-associative mapping works the same way. The cache is divided into "sections" called sets, and each set holds a small, fixed number of blocks (its "ways"; a 4-way cache has four blocks per set). Each memory block maps to exactly one set, typically the block number modulo the number of sets, but it can occupy any free line within that set. When the set is full, one of its blocks has to be evicted, even if other sets still have room.
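A minimal sketch of a 2-way set-associative cache follows. The geometry (4 sets × 2 ways × 16-byte blocks, 8 lines in total) and the least-recently-used (LRU) replacement policy are assumptions chosen for illustration; real caches may use other replacement policies. `SetAssociativeCache` is a hypothetical name, and only tags are tracked.

```python
from collections import OrderedDict


class SetAssociativeCache:
    """Toy N-way set-associative cache that tracks tags only (no data)."""

    def __init__(self, num_sets=4, ways=2, block_size=16):
        self.num_sets = num_sets
        self.ways = ways
        self.block_size = block_size
        # One OrderedDict per set; keys are tags, ordered from least to most recently used.
        self.sets = [OrderedDict() for _ in range(num_sets)]

    def access(self, address):
        """Return True on a hit, False on a miss (loading the block on a miss)."""
        block_number = address // self.block_size
        set_index = block_number % self.num_sets  # the one set this block may use
        tag = block_number // self.num_sets
        lines = self.sets[set_index]
        if tag in lines:
            lines.move_to_end(tag)  # mark as most recently used
            return True
        if len(lines) == self.ways:
            lines.popitem(last=False)  # set is full: evict its least recently used block (assumed LRU)
        lines[tag] = True  # any free way in the set will do
        return False


cache = SetAssociativeCache()
# 0x000, 0x080 and 0x100 are blocks 0, 8 and 16: all map to set 0.
trace = [0x000, 0x080, 0x000, 0x080, 0x100, 0x000]
print([cache.access(a) for a in trace])  # [False, False, True, True, False, False]
```

Blocks 0x000 and 0x080 now share set 0 without evicting each other; only a third block mapping to the same set (0x100) forces an eviction once both ways are taken.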

3. Fully Associative Mapping

Think of a big basket where you can drop items anywhere you like, with no fixed order or arrangement.

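Fully associative mapping is like this basket. A block from memory can be placed in any line of the cache, with no restriction to specific locations or sets, so a block is only evicted when the whole cache is full. The trade-off shows up on lookup: because the data could be anywhere, the cache must compare the requested address against every entry. Hardware performs these comparisons in parallel, which is fast but expensive in circuitry, so fully associative caches are usually kept small.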
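The sketch below models a fully associative cache with 8 lines of 16 bytes and LRU replacement (both assumptions for illustration). Since there is no index, the whole block number acts as the tag, and the lookup loop is a software stand-in for the parallel tag comparison that hardware performs. `FullyAssociativeCache` is a hypothetical name.

```python
class FullyAssociativeCache:
    """Toy fully associative cache with assumed LRU replacement; tracks tags only."""

    def __init__(self, num_lines=8, block_size=16):
        self.num_lines = num_lines
        self.block_size = block_size
        # Stored tags, ordered from least to most recently used.
        self.lines = []

    def access(self, address):
        """Return True on a hit, False on a miss (loading the block on a miss)."""
        tag = address // self.block_size  # no index bits: the whole block number is the tag
        # Compare the requested tag against every line (hardware does this in parallel).
        for i, stored in enumerate(self.lines):
            if stored == tag:
                self.lines.append(self.lines.pop(i))  # move to most recently used position
                return True
        if len(self.lines) == self.num_lines:
            self.lines.pop(0)  # cache full: evict the least recently used block
        self.lines.append(tag)  # the new block may go in any line
        return False


cache = FullyAssociativeCache()
trace = [0x000, 0x080, 0x100, 0x180] * 2
print([cache.access(a) for a in trace])
# [False, False, False, False, True, True, True, True]
```

All four addresses in the trace would compete for a single line in an 8-line direct-mapped cache with 16-byte blocks, but here they coexist, so the second pass is all hits.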